Problem 1

Take a backup of the etcd cluster and save it to /opt/etcd-backup.db.

[My solution]

etcdctl --help

# Install etcdctl if it is not already installed

sudo apt update

sudo apt install etcd-client

# Set the environment variable

export ETCDCTL_API=3

# Take the etcd backup

etcdctl --cacert=<value> \
--cert=<value> \
--key=<value> \
snapshot save <backup file path and name>

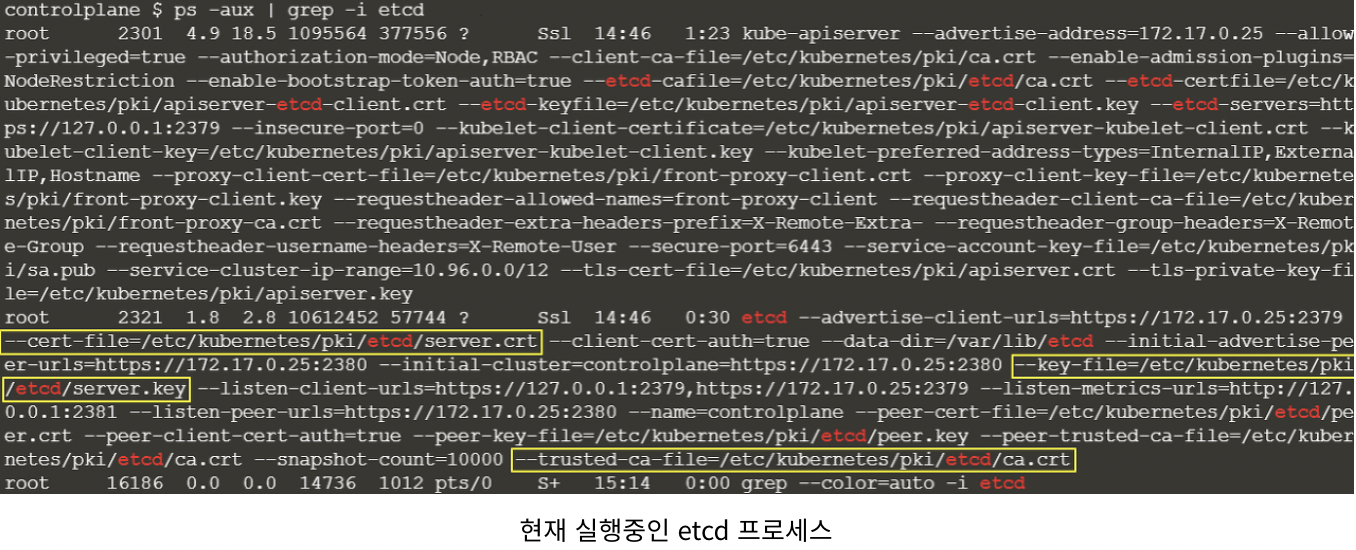

# How to find the values for the three options

ps -aux | grep -i etcd

etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt \
--cert=/etc/kubernetes/pki/etcd/server.crt \
--key=/etc/kubernetes/pki/etcd/server.key \
snapshot save /opt/etcd-backup.db
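The three option values correspond to flags on the etcd process's own command line: `--trusted-ca-file` for `--cacert`, `--cert-file` for `--cert`, and `--key-file` for `--key`. A minimal sketch of pulling them out with standard tools is below. The `flag_value` helper and the sample command line are hypothetical; on a real control plane the string would come from `ps -aux | grep -i etcd`, and the paths may differ.

```shell
# Hypothetical helper: print the value of a --name=value flag found in a command line.
# $1 = flag name without the leading dashes, $2 = the full command line
flag_value() {
  printf '%s\n' "$2" | tr ' ' '\n' | sed -n "s|^--$1=||p" | head -n 1
}

# Sample etcd command line (assumed; paths follow the usual kubeadm layout).
sample='etcd --cert-file=/etc/kubernetes/pki/etcd/server.crt --key-file=/etc/kubernetes/pki/etcd/server.key --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt'

cacert=$(flag_value trusted-ca-file "$sample")
cert=$(flag_value cert-file "$sample")
key=$(flag_value key-file "$sample")

# Print the etcdctl options assembled from the extracted values.
echo "--cacert=$cacert --cert=$cert --key=$key"
```

Because the pattern is anchored at the start of each argument, `--peer-cert-file` is not mistaken for `--cert-file`.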

Problem 2

Create a Pod called redis-storage with image: redis:alpine with a Volume of type emptyDir that lasts for the life of the Pod.

Specs on the below.

- Pod named 'redis-storage' created

- Pod 'redis-storage' uses Volume type of emptyDir

- Pod 'redis-storage' uses volumeMount with mountPath = /data/redis

[My solution]

root@controlplane:~# cat redis-storage.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: redis-storage
  name: redis-storage
spec:
  containers:
  - image: redis:alpine
    name: redis-storage
    resources: {}
    volumeMounts:
    - name: redis-storage
      mountPath: /data/redis
  volumes:
  - name: redis-storage
    emptyDir: {}

root@controlplane:~#

Problem 3

Create a new pod called super-user-pod with image busybox:1.28. Allow the pod to be able to set system_time.

The container should sleep for 4800 seconds.

- Pod: super-user-pod

- Container Image: busybox:1.28

- SYS_TIME capabilities for the container?

[My solution]

root@controlplane:~# cat super-user-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: super-user-pod
  name: super-user-pod
spec:
  containers:
  - image: busybox:1.28
    name: super-user-pod
    command: ["sleep", "4800"]
    securityContext:
      capabilities:
        add: ["SYS_TIME"]

Problem 4

A pod definition file is created at /root/CKA/use-pv.yaml. Make use of this manifest file and mount the persistent volume called pv-1. Ensure the pod is running and the PV is bound.

mountPath: /data

persistentVolumeClaim Name: my-pvc

- persistentVolume Claim configured correctly

- pod using the correct mountPath

- pod using the persistent volume claim?

[My solution]

root@controlplane:~# kubectl get pv
NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
pv-1   10Mi       RWO            Retain           Available                                   7m20s

root@controlplane:~# cat my-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
  - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 10Mi

root@controlplane:~# cat CKA/use-pv.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: use-pv
  name: use-pv
spec:
  volumes:
  - name: my-pvc
    persistentVolumeClaim:
      claimName: my-pvc
  containers:
  - image: nginx
    name: use-pv
    volumeMounts:
    - mountPath: "/data"
      name: my-pvc

Problem 5

Create a new deployment called nginx-deploy, with image nginx:1.16 and 1 replica. Next upgrade the deployment to version 1.17 using rolling update.

- Deployment : nginx-deploy. Image: nginx:1.16

- Image: nginx:1.16

- Task: Upgrade the version of the deployment to 1.17

- Task: Record the changes for the image upgrade

[My solution]

root@controlplane:~# cat nginx-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy
  name: nginx-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-deploy
  template:
    metadata:
      labels:
        app: nginx-deploy
    spec:
      containers:
      - image: nginx:1.16
        name: nginx

root@controlplane:~# kubectl create -f nginx-deploy.yaml

deployment.apps/nginx-deploy created

root@controlplane:~# kubectl set image deployment.apps/nginx-deploy nginx=nginx:1.17 --record

deployment.apps/nginx-deploy image updated

Problem 6

Create a new user called john. Grant him access to the cluster. John should have permission to create, list, get, update and delete pods in the development namespace. The private key exists in the location: /root/CKA/john.key and csr at /root/CKA/john.csr.

Important Note: As of kubernetes 1.19, the CertificateSigningRequest object expects a signerName.

Please refer to the documentation to see an example. The documentation tab is available at the top right of terminal.

- CSR: john-developer Status:Approved

- Role Name: developer, namespace: development, Resource: Pods

- Access: User 'john' has appropriate permissions

[My solution]

root@controlplane:~# cat john-csr.yaml
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: john-developer
spec:
  request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1ZEQ0NBVHdDQVFBd0R6RU5NQXNHQTFVRUF3d0VhbTlvYmpDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRApnZ0VQQURDQ0FRb0NnZ0VCQU1TRXhhdVhldXRoRnRFTlhzcmNwclpWcXRUM2NFVWFPUVhVQ3pZaGczK254R0IvCkRVOW1lTDA4M0FGUFBhWTM2c0hoY21WbU5iZjZqaXlMVWRTUnVCYlN0TWk4Wko3aGN1eWhodWFmanZCOEtsZDkKellNS1pyODRsaHYwUDdyMUltSEQ3OTI5bmNTUitIZFFhT0VHaDlVOWpUSUJIVEdlY3QzalhwVi9XcFRuMHVUegpNNDZCU1NoRUI0MlFSMXZVSGc5Ukd2ck82djlzVVF1ZVhKeVRVUGtDV2ZJQXB0OFdzNFZwSER5U2tVK09UbTJkCk4ra0MyQjE4K1RUMWVHK2xvbkkvS0hRcFdmUzY5SEVOVnJ1VGcranA5QW92VUFTbEZZaW1BQU5uUUJDVnZORmkKTjlReFcvaisvRk8yQkdINWRCOEFTSVhPdFpqcndQS2UvMnRpL0ljQ0F3RUFBYUFBTUEwR0NTcUdTSWIzRFFFQgpDd1VBQTRJQkFRQnllcjBrN2FrWE9NZ01vWkUwMThjYmxlendxMVFyY0x2eFhDYjlrTlpsTmNINUMyWFUyelRiCnZITGo3VUUxVFM1eTRlOTdJZXJrcm5QMUxTVUJ5d0xrU1Y4RWpiR2VWK0FHNEsxNXhaMUVlTFlUamJ1cVFZQksKV2hlcU80SHVaWGt3ZDNNRWlZTlVxaXRxaVd5Rm9nSDBMcDF4UzNIVjNYUGF3UDIzUkI1OUNQMHZXZzk2ZmhXRgo0eGkxck9PeGlkcjM3RFowZVZTbUlzWEJVazVtVFNVbkYzSjJ2K3J0Zkc4UlBQb2dQZlBBeVZlWUZUZ3pjNnI4CmtoVThkU2xJK3E5MmZiWUloVitLTTlCSHNVNzh1bXRMK3BzVUpncjE3Z1NMR2dybXRWV0UvRmRIZVNraW5TdG0Kc2dvbGtFWTEwd3JHbWt1OEJSclNaQUVObVdWcFQyWWIKLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tCg==
  signerName: kubernetes.io/kube-apiserver-client
  usages:
  - client auth
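The `request` field holds the contents of /root/CKA/john.csr, base64-encoded on a single line. A minimal sketch of that encoding step is below. It uses a stand-in file that contains only the PEM header line, not the real CSR; the temporary file and the variable names are placeholders for illustration.

```shell
# Stand-in for /root/CKA/john.csr: only the PEM header line, for illustration.
csr_file=$(mktemp)
printf '%s\n' '-----BEGIN CERTIFICATE REQUEST-----' > "$csr_file"

# Encode the file and strip line breaks so the value fits on one YAML line.
request=$(base64 < "$csr_file" | tr -d '\n')
echo "$request"

rm -f "$csr_file"
```

The output is the same 48 characters that the `request` value in the manifest starts with, since that value begins with the same header line. With the real file the command would be `base64 < /root/CKA/john.csr | tr -d '\n'`, after which the manifest is submitted with `kubectl apply -f john-csr.yaml`.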

root@controlplane:~# kubectl certificate approve john-developer

certificatesigningrequest.certificates.k8s.io/john-developer approved

root@controlplane:~# kubectl create role developer --verb=create --verb=get --verb=list --verb=update --verb=delete --resource=pods -n development

role.rbac.authorization.k8s.io/developer created

root@controlplane:~# kubectl create rolebinding developer-binding-myuser --role=developer --user=john -n development

rolebinding.rbac.authorization.k8s.io/developer-binding-myuser created

kubectl -n development describe rolebindings.rbac.authorization.k8s.io developer-binding-myuser

kubectl auth can-i update pods --namespace=development --as=john

[Answer]

Solution manifest file to create a CSR as follows:

---
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: john-developer
spec:
  signerName: kubernetes.io/kube-apiserver-client
  request: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ1ZEQ0NBVHdDQVFBd0R6RU5NQXNHQTFVRUF3d0VhbTlvYmpDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRApnZ0VQQURDQ0FRb0NnZ0VCQUt2Um1tQ0h2ZjBrTHNldlF3aWVKSzcrVVdRck04ZGtkdzkyYUJTdG1uUVNhMGFPCjV3c3cwbVZyNkNjcEJFRmVreHk5NUVydkgyTHhqQTNiSHVsTVVub2ZkUU9rbjYra1NNY2o3TzdWYlBld2k2OEIKa3JoM2prRFNuZGFvV1NPWXBKOFg1WUZ5c2ZvNUpxby82YU92czFGcEc3bm5SMG1JYWpySTlNVVFEdTVncGw4bgpjakY0TG4vQ3NEb3o3QXNadEgwcVpwc0dXYVpURTBKOWNrQmswZWhiV2tMeDJUK3pEYzlmaDVIMjZsSE4zbHM4CktiSlRuSnY3WDFsNndCeTN5WUFUSXRNclpUR28wZ2c1QS9uREZ4SXdHcXNlMTdLZDRaa1k3RDJIZ3R4UytkMEMKMTNBeHNVdzQyWVZ6ZzhkYXJzVGRMZzcxQ2NaanRxdS9YSmlyQmxVQ0F3RUFBYUFBTUEwR0NTcUdTSWIzRFFFQgpDd1VBQTRJQkFRQ1VKTnNMelBKczB2czlGTTVpUzJ0akMyaVYvdXptcmwxTGNUTStsbXpSODNsS09uL0NoMTZlClNLNHplRlFtbGF0c0hCOGZBU2ZhQnRaOUJ2UnVlMUZnbHk1b2VuTk5LaW9FMnc3TUx1a0oyODBWRWFxUjN2SSsKNzRiNnduNkhYclJsYVhaM25VMTFQVTlsT3RBSGxQeDNYVWpCVk5QaGhlUlBmR3p3TTRselZuQW5mNm96bEtxSgpvT3RORStlZ2FYWDdvc3BvZmdWZWVqc25Yd0RjZ05pSFFTbDgzSkljUCtjOVBHMDJtNyt0NmpJU3VoRllTVjZtCmlqblNucHBKZWhFUGxPMkFNcmJzU0VpaFB1N294Wm9iZDFtdWF4bWtVa0NoSzZLeGV0RjVEdWhRMi80NEMvSDIKOWk1bnpMMlRST3RndGRJZjAveUF5N05COHlOY3FPR0QKLS0tLS1FTkQgQ0VSVElGSUNBVEUgUkVRVUVTVC0tLS0tCg==
  usages:
  - digital signature
  - key encipherment
  - client auth
  groups:
  - system:authenticated

To approve this certificate, run: kubectl certificate approve john-developer

Next, create a role developer and a rolebinding developer-role-binding by running:

$ kubectl create role developer --resource=pods --verb=create,list,get,update,delete --namespace=development

$ kubectl create rolebinding developer-role-binding --role=developer --user=john --namespace=development

To verify the permission from kubectl utility tool:

$ kubectl auth can-i update pods --as=john --namespace=development

Problem 7

Create a nginx pod called nginx-resolver using image nginx, expose it internally with a service called nginx-resolver-service. Test that you are able to look up the service and pod names from within the cluster. Use the image: busybox:1.28 for dns lookup. Record results in /root/CKA/nginx.svc and /root/CKA/nginx.pod

- Pod: nginx-resolver created

- Service DNS Resolution recorded correctly

- Pod DNS resolution recorded correctly

[My solution]

root@controlplane:~# kubectl run nginx-resolver --image=nginx

pod/nginx-resolver created

root@controlplane:~# kubectl expose pod nginx-resolver --name=nginx-resolver-service --target-port=80 --port=80

service/nginx-resolver-service exposed

root@controlplane:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4m21s

nginx-resolver-service ClusterIP 10.111.116.48 <none> 80/TCP 4s

root@controlplane:~# kubectl run test-nslookup --image=busybox:1.28 --restart=Never --rm -it -- nslookup nginx-resolver-service > /root/CKA/nginx.svc

root@controlplane:~# kubectl run test-nslookup --image=busybox:1.28 --restart=Never --rm -it -- nslookup 10-50-192-1.default.pod > /root/CKA/nginx.pod

[Answer]

Use kubectl run to create an nginx pod and a busybox pod, then resolve the nginx service and the nginx pod name from the busybox pod.

To create a pod nginx-resolver and expose it internally:

kubectl run nginx-resolver --image=nginx

kubectl expose pod nginx-resolver --name=nginx-resolver-service --port=80 --target-port=80 --type=ClusterIP

To create a pod test-nslookup. Test that you are able to look up the service and pod names from within the cluster:

kubectl run test-nslookup --image=busybox:1.28 --rm -it --restart=Never -- nslookup nginx-resolver-service

kubectl run test-nslookup --image=busybox:1.28 --rm -it --restart=Never -- nslookup nginx-resolver-service > /root/CKA/nginx.svc

Get the IP of the nginx-resolver pod and replace the dots (.) with hyphens (-); the result is used below.

kubectl get pod nginx-resolver -o wide

kubectl run test-nslookup --image=busybox:1.28 --rm -it --restart=Never -- nslookup <P-O-D-I-P.default.pod> > /root/CKA/nginx.pod
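The dots-to-hyphens step can be done with `tr` rather than by hand. A minimal sketch, assuming the pod IP 10.50.192.1 and the default namespace used in my own run above; `pod_dns_name` is a hypothetical helper name.

```shell
# Hypothetical helper: build the pod DNS name passed to nslookup.
# $1 = pod IP, $2 = namespace
pod_dns_name() {
  printf '%s.%s.pod\n' "$(printf '%s' "$1" | tr '.' '-')" "$2"
}

pod_dns_name 10.50.192.1 default
```

On a live cluster the IP could be read with `kubectl get pod nginx-resolver -o jsonpath='{.status.podIP}'` and passed to the helper instead of being typed in.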

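[Explanation]

In the solution video the command worked without the --restart option, but in practice I had to add --restart=Never for it to work.

The --rm option deletes the pod automatically once it finishes running; it has to be used together with the -it option.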

Problem 8

Create a static pod on node01 called nginx-critical with image nginx and make sure that it is recreated/restarted automatically in case of a failure.

Use /etc/kubernetes/manifests as the Static Pod path for example.

- static pod configured under /etc/kubernetes/manifests ?

- Pod nginx-critical-node01 is up and running

[My solution]

root@node01:~# vim static-pod.yml

root@node01:~# mv static-pod.yml /etc/kubernetes/manifests/

[Answer]

Create a static pod manifest for nginx-critical with the command below:

kubectl run nginx-critical --image=nginx --dry-run=client -o yaml > static.yaml

Copy the contents of this file or use scp command to transfer this file from controlplane to node01 node.

root@controlplane:~# scp static.yaml node01:/root/

To know the IP Address of the node01 node:

root@controlplane:~# kubectl get nodes -o wide

# Perform SSH

root@controlplane:~# ssh node01

OR

root@controlplane:~# ssh <IP of node01>

On node01 node:

Check if static pod directory is present which is /etc/kubernetes/manifests, if it's not present then create it.

root@node01:~# mkdir -p /etc/kubernetes/manifests

Add that complete path to the staticPodPath field in the kubelet config.yaml file.

root@node01:~# vi /var/lib/kubelet/config.yaml

Now move or copy static.yaml to the path /etc/kubernetes/manifests/.

root@node01:~# cp /root/static.yaml /etc/kubernetes/manifests/

Go back to the controlplane node and check the status of static pod:

root@node01:~# exit

logout

root@controlplane:~# kubectl get pods
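Before editing /var/lib/kubelet/config.yaml, it can help to check what staticPodPath is already set to. A minimal sketch, run here against a stand-in config file; the temporary file and its three lines are assumptions for illustration, and on node01 the real file is /var/lib/kubelet/config.yaml.

```shell
# Stand-in for /var/lib/kubelet/config.yaml (assumed contents, for illustration only).
config=$(mktemp)
cat > "$config" <<'EOF'
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
staticPodPath: /etc/kubernetes/manifests
EOF

# Print the configured static pod directory; empty output means the field is not set.
static_pod_path=$(sed -n 's/^staticPodPath:[[:space:]]*//p' "$config")
echo "$static_pod_path"

rm -f "$config"
```

If the printed path already is /etc/kubernetes/manifests, the config file needs no change and copying the manifest into that directory is enough.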
